Dr. Jim Fan Unveils NVIDIA's 'Foundation Agent', an AI Set to Transform Industry Dynamics

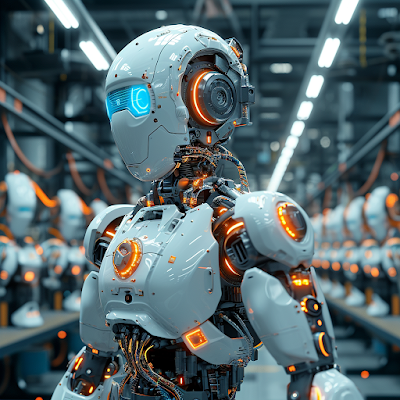

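1. The Foundation Agent: A single generalist AI that aims to generalize along three axes at once: skills, embodiments, and realities.

1.1. Multi-Skill Adaptation: Unlike specialized systems such as AlphaGo, which master a single task, the Foundation Agent is designed to perform a wide range of tasks.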

1.2. Diverse Embodiment Control: The agent can control many different bodies, from robots to digital avatars, adapting to both physical and digital forms.

1.3. Reality Mastery: Capable of operating across numerous simulated and real-world environments, adapting its learning to each unique setting.

2. Compressed-Time Learning in AI: Training in simulation runs far faster than real time, so an AI can gain extensive experience in a fraction of the time the same training would take in the real world.

2.1. Accelerated Simulation Training: AI agents train intensively in simulation at speeds far faster than real time, akin to 'Matrix'-style learning.

2.2. Application to Diverse Scenarios: This method applies to a wide range of tasks, from physical activities to complex problem-solving.

2.3. Real-World Application Potential: Skills learned in these accelerated environments are expected to translate effectively into real-world applications.
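The speed-up described in section 2 comes down to simple arithmetic. Simulated experience equals wall-clock time multiplied by two factors: the number of environments running in parallel, and how much faster than real time each one runs. The sketch below uses illustrative values for both factors, and these are assumptions rather than figures from the talk.

```python
# Back-of-the-envelope estimate of how much experience a simulator can provide.
# The parallelism and speed-up values used below are illustrative assumptions.

HOURS_PER_YEAR = 24 * 365


def simulated_years(wall_clock_hours: float, parallel_envs: int, realtime_factor: float) -> float:
    """Years of experience gathered in `wall_clock_hours` of real time.

    parallel_envs:   number of simulated environments stepped at once (assumed)
    realtime_factor: how many times faster than real time each environment runs (assumed)
    """
    experience_hours = wall_clock_hours * parallel_envs * realtime_factor
    return experience_hours / HOURS_PER_YEAR


def wall_clock_days_needed(target_years: float, parallel_envs: int, realtime_factor: float) -> float:
    """Real days needed to accumulate `target_years` of simulated experience."""
    experience_hours = target_years * HOURS_PER_YEAR
    return experience_hours / (parallel_envs * realtime_factor) / 24


if __name__ == "__main__":
    # Hypothetical setup: 1,000 environments, each running 10x faster than real time.
    print(f"{simulated_years(24, 1000, 10):.1f} years of experience per real day")
    print(f"{wall_clock_days_needed(10, 1000, 10):.2f} days for 10 years of experience")
```

The two functions are inverses of each other. Doubling either the parallelism or the per-environment speed halves the real time needed.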

3. Voyager Project: An AI agent developed for Minecraft that learns continuously and acquires new skills without human intervention.

3.1. Skill Development in Gaming: Voyager can autonomously explore, mine, craft, and fight in Minecraft, a significant step forward for AI in complex, open-ended game worlds.

3.2. Self-Improvement Mechanism: Incorporates self-reflection to learn from actions and improve skills, using feedback from the game environment.

3.3. Lifelong Learning: Demonstrates the potential for AI agents to engage in perpetual learning and skill development, mirroring human-like curiosity and adaptability.
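The self-improvement cycle in 3.1 to 3.3 can be sketched as a loop with four steps. A curriculum proposes a task, the agent writes a program, the game returns feedback, and a self-check decides whether to retry with the errors as critique. In this minimal sketch, `propose_task`, `write_program`, `run_in_game`, and `self_verify` are hypothetical stubs standing in for the LLM calls and the Minecraft environment. The fixed task list is also an assumption.

```python
# A minimal sketch of a Voyager-style lifelong-learning loop.
# All four methods are hypothetical stubs for LLM calls and the game itself.
from dataclasses import dataclass, field


@dataclass
class Feedback:
    success: bool
    errors: list = field(default_factory=list)


@dataclass
class Agent:
    completed: list = field(default_factory=list)
    failed: list = field(default_factory=list)
    max_retries: int = 4

    def propose_task(self) -> str:
        # Curriculum stub: return the next task not yet attempted, or "" when done.
        curriculum = ["mine wood", "craft table", "craft pickaxe", "mine stone"]
        for task in curriculum:
            if task not in self.completed and task not in self.failed:
                return task
        return ""

    def write_program(self, task: str, critique: list) -> str:
        # Stub for the LLM writing code; the revision number tracks critique rounds.
        return f"program for {task} (revision {len(critique)})"

    def run_in_game(self, program: str, task: str) -> Feedback:
        # Stub environment: the first draft fails, any revised draft succeeds.
        if "revision 0" in program:
            return Feedback(success=False, errors=[f"{task}: missing prerequisite"])
        return Feedback(success=True)

    def self_verify(self, task: str, feedback: Feedback) -> bool:
        # Stub self-verification: trust the environment's success signal.
        return feedback.success

    def learn(self) -> None:
        while task := self.propose_task():
            critique: list = []
            for _ in range(self.max_retries):
                program = self.write_program(task, critique)
                feedback = self.run_in_game(program, task)
                if self.self_verify(task, feedback):
                    self.completed.append(task)
                    break
                # Self-reflection: feed the errors back into the next attempt.
                critique.extend(feedback.errors)
            else:
                self.failed.append(task)
```

Calling `Agent().learn()` runs the loop until the curriculum is exhausted. Each task here succeeds on its second attempt, after one round of error feedback.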

4. MetaMorph Project: A foundation model, developed at Stanford, that controls thousands of robots with different body configurations.

4.1. Diverse Robotic Control: Capable of operating robots with varied kinematic characteristics, indicating a significant leap towards versatile physical autonomy.

4.2. Vocabulary for Robotics: Uses a language-model-style approach in which each robot body is described as a sentence, allowing MetaMorph to "write out" motor controls.

4.3. Future Potential: Envisions expansion to control diverse forms like humanoid robots and drones, suggesting a path towards highly adaptable physical AI systems.
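The idea of a "robot body as a sentence" can be made concrete. Each limb becomes one token, a fixed-length feature vector, and a whole robot becomes a padded sequence of those tokens with a mask marking which slots are real. The limb features, their order, and `MAX_LIMBS` are assumptions chosen for illustration and are not MetaMorph's exact encoding.

```python
# Sketch: encode a robot's limbs as a fixed-length "sentence" of tokens.
# Feature choice and MAX_LIMBS are illustrative assumptions.
from dataclasses import dataclass

MAX_LIMBS = 8        # hypothetical upper bound on limbs per robot
PAD = [0.0] * 5      # padding token for unused slots


@dataclass
class Limb:
    parent: int         # index of parent limb (-1 for the torso)
    length: float       # limb length in metres
    radius: float       # limb radius in metres
    joint_range: float  # joint range of motion in radians
    gear: float         # motor gear ratio


def limb_token(limb: Limb) -> list:
    """One limb -> one token (a 5-element feature vector)."""
    return [float(limb.parent), limb.length, limb.radius, limb.joint_range, limb.gear]


def encode_body(limbs: list) -> tuple:
    """Return (tokens, mask): tokens padded to MAX_LIMBS, mask marks real limbs."""
    if len(limbs) > MAX_LIMBS:
        raise ValueError("robot has more limbs than MAX_LIMBS")
    tokens = [limb_token(limb) for limb in limbs]
    mask = [True] * len(tokens) + [False] * (MAX_LIMBS - len(tokens))
    tokens += [list(PAD) for _ in range(MAX_LIMBS - len(tokens))]
    return tokens, mask
```

Padding to a fixed length lets robots with different limb counts share one model. The mask tells the model which tokens to ignore.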

5. Isaac Sim for Accelerated Learning: NVIDIA's simulation platform, which dramatically speeds up the learning process for AI agents.

5.1. Intense Training Simulation: Enables AI to undergo years of training within days, akin to scenarios depicted in science fiction like "The Matrix."

5.2. Photorealistic Simulation: Can render detailed environments to train vision models, bridging the gap between virtual and real-world perceptions.

5.3. Generalization to the Physical World: Mastering a large number of simulations could enable AI to generalize to the real world, suggesting a future in which AI moves seamlessly between virtual and physical realities.
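Domain randomization is one widely used technique for turning "many simulations" into real-world robustness. Each training episode samples different physics and rendering parameters, so the real world ends up looking like just one more variation. The talk does not spell out NVIDIA's recipe, so this sketch is an assumption. The parameter names and ranges are illustrative and are not Isaac Sim settings.

```python
# Domain randomization sketch: sample a fresh simulated world per episode.
# Parameter names and ranges are illustrative assumptions, not Isaac Sim settings.
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SimParams:
    friction: float
    mass_scale: float
    light_intensity: float
    camera_noise_std: float


RANGES = {
    "friction": (0.5, 1.5),
    "mass_scale": (0.8, 1.2),
    "light_intensity": (200.0, 1500.0),
    "camera_noise_std": (0.0, 0.05),
}


def sample_params(rng: random.Random) -> SimParams:
    """Draw each parameter uniformly from its range."""
    return SimParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})


def train(num_episodes: int, seed: int = 0) -> list:
    rng = random.Random(seed)
    seen = []
    for _ in range(num_episodes):
        params = sample_params(rng)
        # A real pipeline would reset the simulator with `params` and run a
        # policy-update step here; this sketch only records the variation.
        seen.append(params)
    return seen
```

Seeding the generator makes a training run reproducible while still exposing the policy to a different world in every episode.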

6. Foundation Agent as a Unified Model: An AI model designed to operate across various realities, both virtual and physical.

6.1. Versatility Across Realities: Aims to function in any environment, with adaptability akin to AI characters in films such as "WALL-E" and "Star Wars."

6.2. Task and Embodiment Inputs: Accepts prompts for different tasks and embodiments, and outputs actions, suggesting a highly flexible and responsive AI system.

6.3. Grand Challenge in AI: The development of such a Foundation Agent represents a significant milestone in AI research, pointing towards a future of comprehensive autonomous systems.
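The input/output contract in 6.2 can be written down as a type signature: an embodiment prompt and a task prompt go in, and an action comes out. The class and field names below are hypothetical. The policy body is a placeholder that only shows how one model could serve bodies with different numbers of actuators, and it does not represent any actual Foundation Agent API.

```python
# Hypothetical interface for a Foundation Agent: one policy, many bodies and tasks.
from dataclasses import dataclass


@dataclass
class Embodiment:
    name: str
    num_actuators: int  # size of the action vector this body expects


@dataclass
class Prompt:
    embodiment: Embodiment
    task: str


class FoundationAgent:
    def act(self, prompt: Prompt, observation: list) -> list:
        """Map (embodiment, task, observation) to an action for that body.

        Placeholder logic: a real model would condition a learned network on
        the prompt and observation; this only returns a zero action of the
        size the embodiment expects.
        """
        if prompt.embodiment.num_actuators <= 0:
            raise ValueError("embodiment must have at least one actuator")
        return [0.0] * prompt.embodiment.num_actuators
```

The same `act` call serves, for example, a hypothetical 21-actuator humanoid asked to "walk to the door" and a 4-rotor drone asked to "hover". Only the prompt changes between the two cases.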

7. Code as Action in AI: Voyager acts in Minecraft by writing code, converting the 3D world into textual representations it can interact with.

7.1. Textual Representation in Gaming: Uses Mineflayer, a JavaScript API for Minecraft, to convert game elements into text, so Voyager can interact with the game by writing programs.

7.2. Self-Reflection and Skill Enhancement: Incorporates a mechanism for self-reflection and error correction, improving its coding abilities and interaction with the game.

7.3. Recursive Capability Building: Builds its capabilities recursively, storing successful programs in a skill library for future use.
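The skill library in 7.3 behaves like a searchable store. A verified program is saved under a natural-language description, and later tasks retrieve the most relevant skills to reuse. Voyager indexes skills with learned text embeddings. This sketch substitutes a simple bag-of-words cosine similarity so that it runs on the standard library alone, and the example skills are made up.

```python
# Skill-library sketch: store verified programs, retrieve them by description.
# Bag-of-words cosine similarity stands in for learned text embeddings.
import math
import re
from collections import Counter


def _vector(text: str) -> Counter:
    """Word-count vector of a description."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SkillLibrary:
    def __init__(self) -> None:
        self._skills: dict = {}  # description -> program source

    def add(self, description: str, program: str) -> None:
        # Only programs that passed self-verification should be added.
        self._skills[description] = program

    def retrieve(self, query: str, k: int = 2) -> list:
        """Return the k (description, program) pairs most similar to the query."""
        q = _vector(query)
        ranked = sorted(self._skills.items(),
                        key=lambda item: _cosine(q, _vector(item[0])),
                        reverse=True)
        return ranked[:k]
```

For example, after adding hypothetical skills such as "craft a wooden pickaxe" and "mine iron ore with a stone pickaxe", the query "mine iron ore" returns the mining skill first. The agent can then build on that skill instead of rewriting it.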

8. MetaMorph's Robot Vocabulary: MetaMorph uses a unique language model approach for diverse robotic control.

8.1. Descriptive Language for Robotics: Employs a special vocabulary to describe robot parts, making each robot body akin to a sentence in this language.

8.2. Transformer Application in Robotics: Applies transformer models, similar to ChatGPT, but for generating motor controls instead of text.

8.3. Potential for Generalized Robot Control: The approach hints at future expansions where MetaMorph could control a vast array of robotic forms, from humanoid robots to drones.
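The transformer analogy in 8.2 can be illustrated with a single self-attention step. Each limb token attends to every other real limb token, and an output head turns the result into one motor command per limb. This is a toy, untrained sketch in pure Python, with random weights and one attention head. It does not reproduce MetaMorph's actual architecture, embedding, or training.

```python
# Toy single-head self-attention over limb tokens, producing one torque per limb.
# Random, untrained weights: this shows the data flow, not a working controller.
import math
import random


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(xs):
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_policy(tokens, mask, d_model=4, seed=0):
    """Map limb tokens (list of feature vectors) to one torque per real limb."""
    rng = random.Random(seed)
    d_in = len(tokens[0])

    def rand_matrix(rows, cols):
        return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

    w_q = rand_matrix(d_model, d_in)
    w_k = rand_matrix(d_model, d_in)
    w_v = rand_matrix(d_model, d_in)
    w_out = rand_matrix(1, d_model)[0]

    queries = [matvec(w_q, t) for t in tokens]
    keys = [matvec(w_k, t) for t in tokens]
    values = [matvec(w_v, t) for t in tokens]

    torques = []
    for i, q in enumerate(queries):
        if not mask[i]:
            continue  # padded slots produce no action
        # Scaled dot-product scores; padded limbs get -inf so they receive zero weight.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_model) if mask[j] else -math.inf
                  for j, k in enumerate(keys)]
        weights = softmax(scores)
        context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(d_model)]
        # tanh keeps each motor command bounded in (-1, 1).
        torques.append(math.tanh(sum(a * b for a, b in zip(w_out, context))))
    return torques
```

Because attention works over a sequence of any length, the same weights can drive robots with different numbers of limbs. This property is what makes the "one model, many bodies" idea plausible.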

9. Isaac Sim's Accelerated AI Training: NVIDIA's Isaac Sim platform significantly accelerates AI learning and skill acquisition.

9.1. Rapid Skill Development in Simulation: Simulates intense training environments where AI can acquire years of skills in a matter of days.

9.2. Photorealism and Computer Vision Training: Uses photorealistic rendering to train AI in computer vision, enhancing its perception capabilities.

9.3. Procedural World Generation: Can generate a virtually unlimited number of world variations, so the AI encounters, and learns to adapt to, a wide range of scenarios.
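Procedural generation typically derives each world deterministically from a seed. This makes it possible to produce an effectively endless stream of distinct layouts and to recreate any one of them later. The tile types, grid size, and frequencies below are made-up assumptions and do not describe Isaac Sim's own generator.

```python
# Seeded procedural world generation: same seed -> same world, new seed -> new world.
import random

TILES = ["floor", "obstacle", "ramp", "water"]   # hypothetical tile types
WEIGHTS = [0.7, 0.15, 0.1, 0.05]                 # hypothetical tile frequencies


def generate_world(seed: int, width: int = 8, height: int = 8) -> list:
    """Build a width x height grid of tiles determined entirely by `seed`."""
    rng = random.Random(seed)
    grid = [rng.choices(TILES, weights=WEIGHTS, k=width) for _ in range(height)]
    # Keep the spawn corner and goal corner walkable so every world is usable.
    grid[0][0] = "floor"
    grid[height - 1][width - 1] = "floor"
    return grid


def world_stream(start_seed: int = 0):
    """Yield an endless sequence of (seed, world) pairs."""
    seed = start_seed
    while True:
        yield seed, generate_world(seed)
        seed += 1
```

Storing only the seed is enough to reproduce a world later. This is handy when a policy fails in one particular variation and that variation needs to be replayed.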

10. Foundation Agent as an AI Paradigm Shift: Envisioned as a single AI model capable of functioning in any environment and reality.

10.1. Unprecedented Versatility and Adaptability: Designed to operate in varied realities, whether physical or virtual, echoing the versatility seen in science fiction AI.

10.2. Prompt-to-Action Framework: Takes embodiment and task prompts as input and outputs actions, aiming for human-like flexibility and responsiveness.

10.3. The Future of Autonomous Systems: Represents a significant milestone towards the creation of comprehensive, adaptive, and autonomous AI systems.

1. This article is augmented by my custom AI assistant.

2. FOLLOW ME: https://twitter.com/dmitristern
